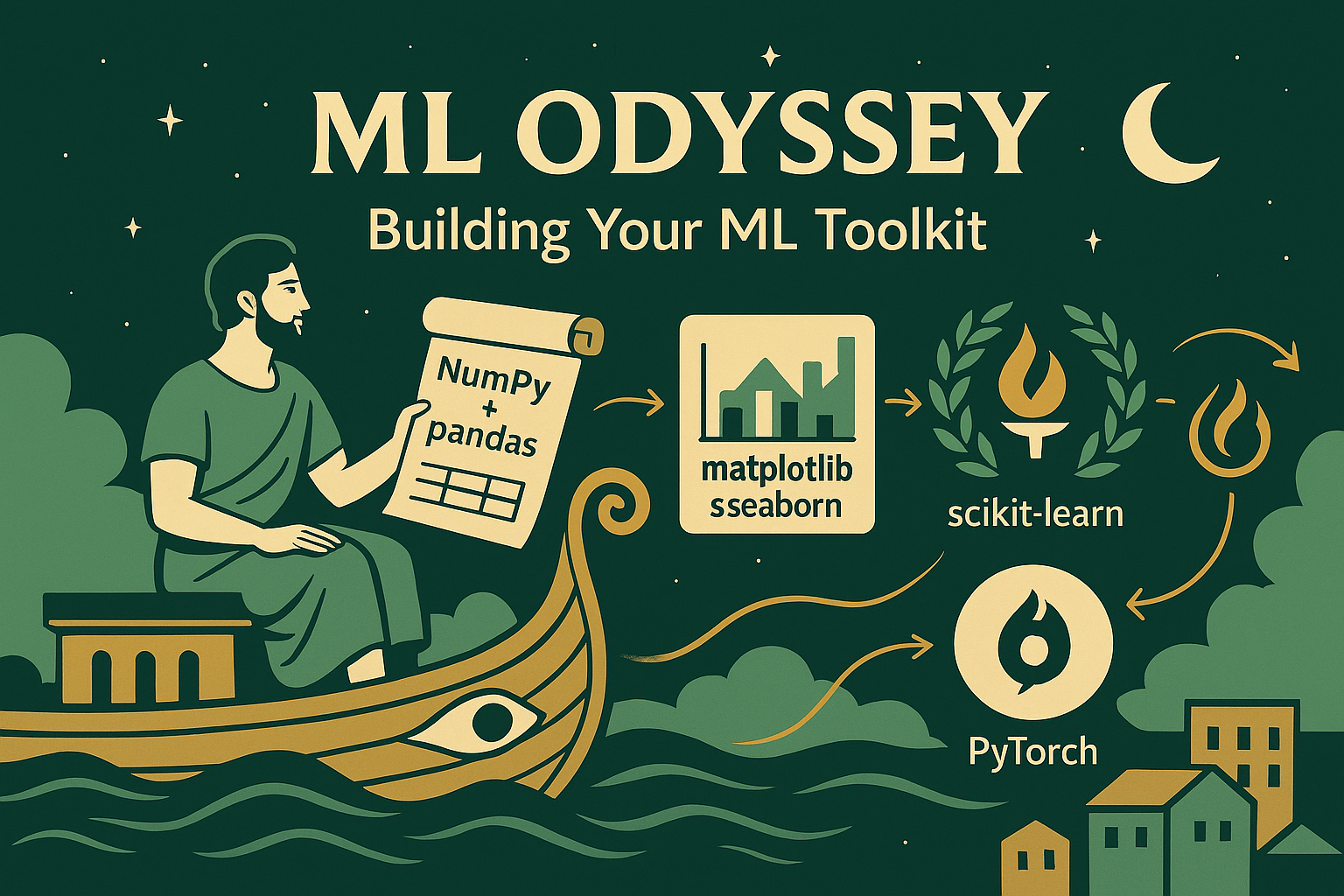

ML Odyssey: Part 1 - Building Your ML Toolkit

As someone who recently started learning Machine Learning, I’ve found that success in ML isn’t about choosing one framework; it’s about building a toolkit that covers every stage of the ML pipeline. This series, “ML Odyssey,” documents my learning journey and the reasoning behind each tool choice.

The Complete ML Pipeline

Machine learning is much more than just training models. It’s a comprehensive process that requires the right tools at each stage:

- Data Processing: NumPy & Pandas

- Data Visualization: Matplotlib & Seaborn

- Traditional ML: Scikit-learn

- Deep Learning: PyTorch

📚 Learning Progression: This sequence follows the natural ML learning path—master data fundamentals first, then traditional algorithms, and finally advance to neural networks when you need that extra power!

Let’s explore why we’ve chosen each tool and how they work together to create a powerful ML workflow.

1. Data Processing: The Foundation

Every successful ML project starts with clean, well-structured data. For this critical stage, we’ve chosen two powerhouse libraries:

NumPy

- High-performance array operations and mathematical functions

- The array foundation underneath pandas and scikit-learn, and interoperable with PyTorch tensors

- Essential for efficient numerical computations

Pandas

- Intuitive data structures for labeled, heterogeneous data

- Powerful data manipulation and analysis capabilities

- Seamless integration with visualization and ML libraries

🎯 Why This Combination Works: NumPy provides the computational engine, while pandas adds the convenience layer for real-world data operations. Together, they handle everything from loading CSV files to complex data transformations—the essential foundation for any ML pipeline.

| Feature | NumPy | Pandas |
|---|---|---|
| Primary Use | ✅ Numerical computations | ✅ Data manipulation & analysis |
| Data Types | 🔄 Homogeneous arrays | ✅ Mixed data types, missing values |
| Performance | ✅ Extremely fast | 🔄 Fast for most operations |
| Learning Curve | 🔄 Steeper for beginners | ✅ More intuitive API |
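To make the division of labor concrete, here is a minimal sketch of both libraries on the same small task. The CSV text and column names are made up for illustration; in a real project the data would come from a file on disk.

```python
import io

import numpy as np
import pandas as pd

# NumPy: vectorized math on a homogeneous array (no Python loop needed)
heights_cm = np.array([170.0, 182.5, 165.0, 190.0])
heights_m = heights_cm / 100.0
mean_height = heights_m.mean()

# pandas: labeled, mixed-type data, including a missing value.
# The CSV text below is invented illustration data.
csv_text = """name,height_cm,city
Ana,170.0,Berlin
Ben,182.5,
Cleo,165.0,Hamburg
Dev,190.0,Berlin
"""
df = pd.read_csv(io.StringIO(csv_text))

# Fill the missing city, then aggregate per group
df["city"] = df["city"].fillna("Unknown")
mean_by_city = df.groupby("city")["height_cm"].mean()

# A numeric pandas column converts directly to a NumPy array
height_array = df["height_cm"].to_numpy()

print(mean_height)
print(mean_by_city)
```

The pattern to notice: pandas takes care of labels, missing values, and grouping, and `to_numpy()` hands plain arrays to anything that expects NumPy input.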

2. Data Visualization: Seeing Your Data

Understanding your data through visualization is crucial before building any ML model. Our visualization toolkit combines flexibility with beauty:

Matplotlib

- Complete control over every aspect of your plots

- Publication-quality figures and scientific visualizations

- The foundation for many Python plotting libraries, including Seaborn and pandas’ built-in plotting

Seaborn

- Modern, attractive statistical graphics out of the box

- Perfect integration with pandas DataFrames

- Complex statistical visualizations with simple commands

📊 Visualization Strategy: Matplotlib provides the canvas and fine control, while Seaborn adds statistical insight and modern aesthetics. This combination lets you create both quick exploratory plots and publication-ready figures.

3. Traditional Machine Learning: Scikit-learn

Before diving into neural networks, it’s essential to master traditional ML algorithms. Scikit-learn is an ideal starting point, and it is often all you need when a problem doesn’t call for the complexity of deep learning:

- 📈 Traditional Algorithms: Comprehensive collection of regression, classification, and clustering algorithms

- 🛠️ Data Processing: Robust preprocessing, feature selection, and model evaluation tools

- 🧪 Easy Integration: Seamless workflow with NumPy and pandas

- 🖥️ Learning-Focused: Excellent for understanding ML fundamentals and baselines

Many real-world problems, especially those with small or tabular datasets, are solved more effectively with traditional ML than with deep learning!
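Here is a minimal sketch of a typical scikit-learn workflow: split the data, chain preprocessing and a model in a pipeline, then evaluate on held-out data. The Iris dataset and logistic regression are picked only for illustration and are not necessarily what later parts of the series use.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Small built-in dataset: 150 flowers, 4 numeric features, 3 classes
X, y = load_iris(return_X_y=True)

# Hold out 25% of the rows for evaluation, keeping class proportions
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Preprocessing and model chained into one estimator
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"Test accuracy: {accuracy:.2f}")
```

Putting the scaler inside the pipeline means it is fitted on the training split only, which avoids leaking information from the test set.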

4. Deep Learning: When You Need Neural Networks

Once you’ve mastered traditional ML, PyTorch opens the door to neural networks and deep learning:

- 🔥 Dynamic Computation Graphs: Build and modify neural networks on the fly, making experimentation easy

- 🐍 Pythonic and Intuitive: Designed to feel natural for Python developers, with clear and readable code

- 🚀 From Research to Production: Widely adopted in academia and industry, with tools for deployment and scaling

- 🧩 Rich Ecosystem: Extensive libraries for computer vision, NLP, audio, and more (e.g., TorchVision and TorchAudio)

- 🔎 Debugging Made Easy: Leverage Python debugging tools and interactive development with Jupyter notebooks

PyTorch’s approach to machine learning feels natural to Python developers, making it an excellent choice for both beginners and experts.
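To give a feel for what "dynamic" and "Pythonic" mean in practice, here is a small sketch: automatic differentiation on a single value, followed by a tiny training loop. The synthetic data, learning rate, and epoch count are arbitrary choices for this illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Autograd: gradients are recorded while ordinary Python code runs
x = torch.tensor(3.0, requires_grad=True)
y = x ** 2 + 2 * x
y.backward()
grad_at_3 = x.grad.item()  # dy/dx = 2x + 2, evaluated at x = 3

# Synthetic regression data: y = 3x + 1 plus a little noise
inputs = torch.linspace(-1, 1, 100).unsqueeze(1)
targets = 3 * inputs + 1 + 0.1 * torch.randn_like(inputs)

model = nn.Linear(1, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.MSELoss()

losses = []
for epoch in range(200):
    optimizer.zero_grad()                    # clear gradients from the last step
    loss = loss_fn(model(inputs), targets)   # forward pass
    loss.backward()                          # backward pass
    optimizer.step()                         # update the weight and bias
    losses.append(loss.item())

print(f"gradient at x=3: {grad_at_3}")
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

Because the loop is plain Python, you can put a `print` or a debugger breakpoint anywhere inside it, which is a large part of why debugging feels straightforward.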

PyTorch vs TensorFlow: The Popularity Trend

Here’s how PyTorch has been gaining momentum against TensorFlow over recent years:

Source: Google Trends

When to Use Traditional ML vs Deep Learning

Understanding when to use each approach is crucial for ML success:

| Aspect | Traditional ML (Scikit-learn) | Deep Learning (PyTorch) |
|---|---|---|
| Best For | ✅ Tabular data, small datasets, interpretability | ✅ Images, text, large datasets, complex patterns |
| Data Requirements | ✅ Works well with small datasets (100s-1000s) | 🔄 Needs large datasets (1000s-millions) |
| Training Time | ✅ Fast training, quick iterations | 🔄 Longer training, requires patience |
| Interpretability | ✅ Easy to understand and explain | 🔄 “Black box” behavior |
| Learning Curve | ✅ Gentle, mathematical concepts | 🔄 Steeper, many hyperparameters |
| When to Start | ✅ Start here - build fundamentals | 🔄 After mastering traditional ML |

Our Learning-First Approach

- 🏗️ Build Strong Foundations: Start with scikit-learn to understand core ML concepts

- 📊 Master Data Fundamentals: Learn how algorithms work before neural networks abstract them away

- 🎯 Traditional ML First: Many problems are solved better without deep learning

- 🧠 Graduate to Neural Networks: PyTorch becomes intuitive after understanding ML fundamentals

- 🔄 Use Both Together: Scikit-learn for preprocessing/baselines, PyTorch for complex patterns
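The last point can be sketched roughly as follows: scikit-learn does the splitting, scaling, and a quick baseline, and the same preprocessed arrays are then converted to tensors for PyTorch. The synthetic data and the choice of linear regression as the baseline are assumptions made for this example.

```python
import numpy as np
import torch
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic regression data for illustration only
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=200)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# scikit-learn: preprocessing fitted on the training split only
scaler = StandardScaler().fit(X_train)
X_train_s = scaler.transform(X_train)
X_test_s = scaler.transform(X_test)

# scikit-learn: a quick baseline that any neural network should beat
baseline = LinearRegression().fit(X_train_s, y_train)
baseline_r2 = baseline.score(X_test_s, y_test)
print(f"Baseline R^2: {baseline_r2:.3f}")

# PyTorch: the same preprocessed arrays become float32 tensors
X_train_t = torch.from_numpy(X_train_s).float()
y_train_t = torch.from_numpy(y_train).float().unsqueeze(1)
```

If a neural network trained on `X_train_t` cannot beat `baseline_r2`, that is a good sign the simpler model is the right tool for the problem.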

What’s Coming in This Series?

Our ML Odyssey will take you through practical, hands-on projects using this complete toolkit:


Part 2: Data Processing & Visualization

Master pandas, matplotlib, and seaborn for data manipulation and visualization

Part 3: Statistical Methods for EDA

Understanding statistical concepts and analytical techniques for data exploration

Part 4: EDA - Pokemon Dataset

Hands-on Practice! Apply EDA techniques to real Pokemon data

Part 5: Traditional ML Fundamentals

Core algorithms: classification, regression, clustering theory

Part 6: Traditional ML - Pokemon

Apply Algorithms! Implement scikit-learn models

Part 7: Neural Networks Fundamentals

Deep learning concepts and framework comparisons

Part 8: PyTorch Theory

Tensors, automatic differentiation, and model optimization

Part 9: PyTorch Practice

Build Neural Networks! Hands-on deep learning implementation

Part 10: Berlin Housing Problem

Real-World Project! Regression with traditional ML and neural networks

Part 11: Bone Fractures Image Dataset

Computer Vision! Image classification with deep learning

Part 12: NLP with Scikit-learn

Text Analysis! Natural language processing fundamentals

Part 13: Web Scraping with Python

Data collection techniques and ethical scraping practices

Part 14: Kubeflow Pipelines Intro

ML pipeline orchestration and MLOps fundamentals

Part 15: Jupyter to Kubeflow

Converting notebooks to production-ready ML pipelines

Part 16: Kubeflow Pipelines Exercise

Production ML! Deploy and orchestrate ML workflows

Part 17: ML Project Management

Version control, experiment tracking, and model versioning

🎯 Learning Path: Notice how we start with data fundamentals, master traditional ML with scikit-learn, then graduate to neural networks with PyTorch. This progression ensures you understand the “why” before diving into the “how” of deep learning!

All code examples are available in our ML Odyssey repository, complete with:

- 📓 Detailed Jupyter notebooks for interactive learning

- 🚀 Production-ready deployment examples

- 🛠️ Integration guides for the complete toolkit

Setting Up Your Environment

We will use Python’s built-in venv to create isolated spaces for your project dependencies, preventing the dreaded “dependency hell” on your Fedora system. Think of them as miniature, self-contained Python installations just for your ML work!

venv is Python’s standard way to create lightweight virtual environments. It’s built right into Python 3, so you don’t need any extra installations beyond your core Python.

Create Your Virtual Environment. I like to keep it in my home directory:

```bash
python3 -m venv ~/venv
```

Activate the Virtual Environment. This command modifies your shell’s `PATH` to point to the virtual environment’s Python and `pip`:

```bash
source ~/venv/bin/activate
```

You’ll notice `(venv)` appearing at the start of your terminal prompt. This is your visual cue that you’re operating within the virtual environment. All packages you install now will live here, not globally.

Install PyTorch and Dependencies. With your virtual environment activated, you can install PyTorch with the command provided in the PyTorch installation guide. For example, to install the CPU version of PyTorch, you can run:

```bash
# Deep learning framework
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu
```

Install the Complete Data Science Stack:

```bash
# Data processing and visualization
pip install numpy pandas matplotlib seaborn

# Traditional machine learning
pip install scikit-learn

# Development and experimentation tools
pip install jupyterlab kagglehub scipy

# Spatial analysis library
pip install libpysal esda
```

Start JupyterLab. With everything set up, launch your ML workspace:

```bash
jupyter lab
```

✅ Success! You now have a complete ML toolkit ready for the entire learning journey:

- 🔢 Data Processing: NumPy & Pandas

- 📊 Visualization: Matplotlib & Seaborn

- 🤖 Traditional ML: Scikit-learn (start here for algorithms!)

- 🧠 Deep Learning: PyTorch (after mastering traditional ML)

- 💻 Development: JupyterLab
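If you want to double-check the installation, a small script along these lines can report which packages are visible in the active environment. The helper name `installed_versions` and the package list are my own choices for this sketch; it only relies on the standard library’s `importlib.metadata`.

```python
from importlib import metadata

# Distribution names as passed to pip in the setup steps
PACKAGES = [
    "numpy", "pandas", "matplotlib", "seaborn",
    "scikit-learn", "torch", "jupyterlab",
]


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(PACKAGES).items():
        print(f"{name:<14}{version or 'NOT INSTALLED'}")
```

Run it with the virtual environment activated; anything reported as `NOT INSTALLED` usually means the `pip install` ran outside the environment.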

References

This series draws inspiration from excellent resources like:

- “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron

- “Deep Learning with PyTorch” by Stevens, Antiga, and Viehmann

- “Python for Data Analysis” by Wes McKinney

- Official documentation for all the libraries we use

Join me in this learning journey as we explore the fascinating world of machine learning with a complete, production-ready toolkit! The focus will be on practical, hands-on learning that you can apply immediately to your own projects.